Complete Guide On Fine-Tuning LLMs using RLHF

Fine-tuning LLMs can help you build custom, task-specific, expert models. Read this blog to learn the methods, steps, and process for fine-tuning with RLHF.

In discussions about why ChatGPT has captured our fascination, two common themes emerge:

1. Scale: Increasing data and computational resources.

2. User Experience (UX): Transitioning from prompt-based interactions to more natural chat interfaces.

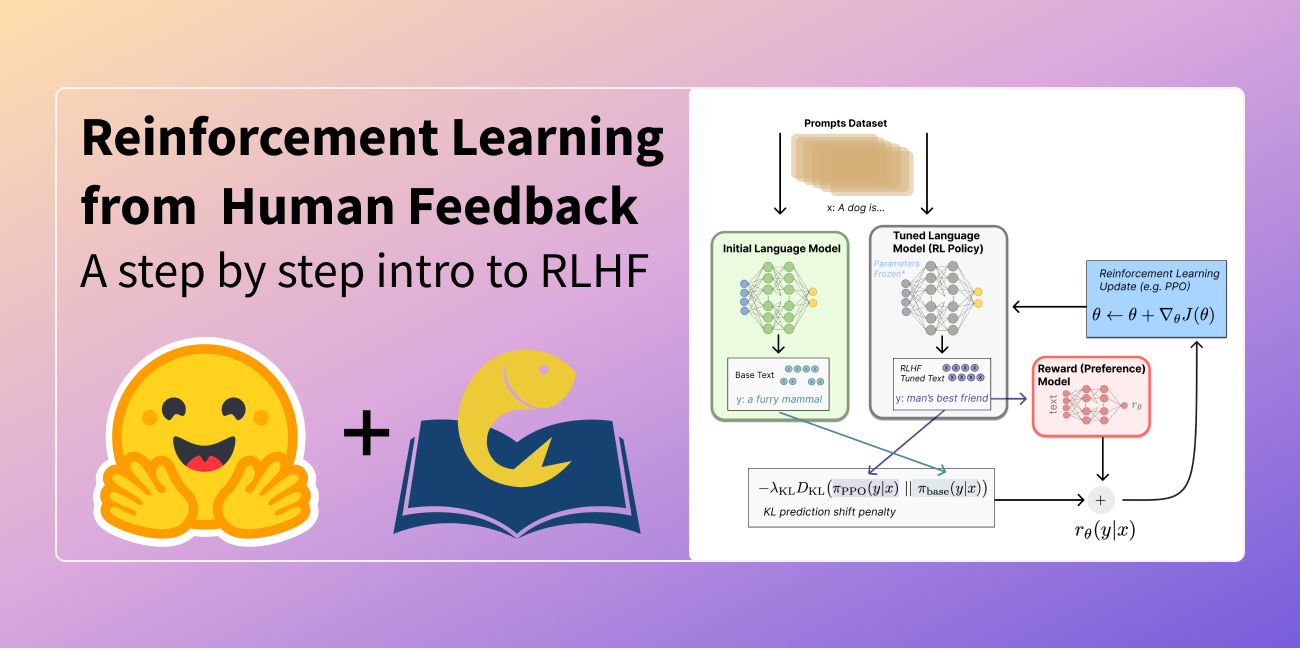

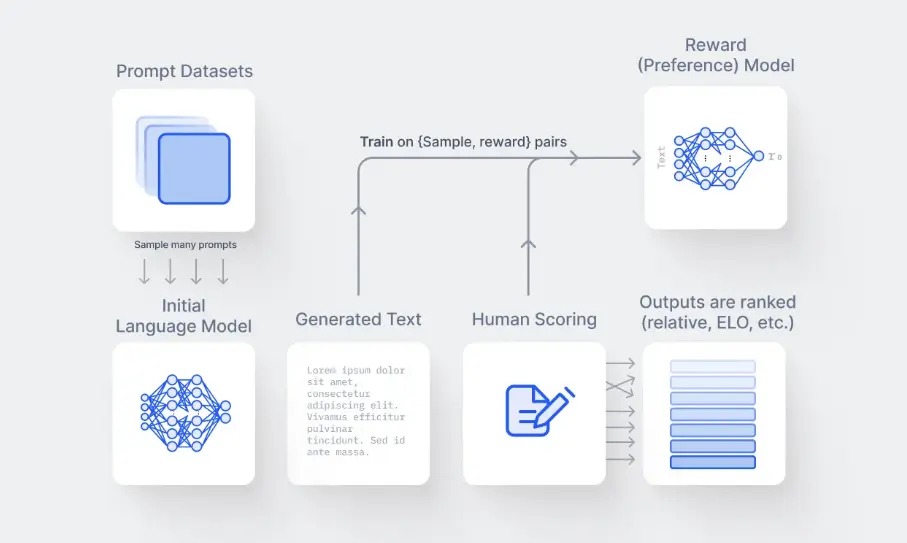

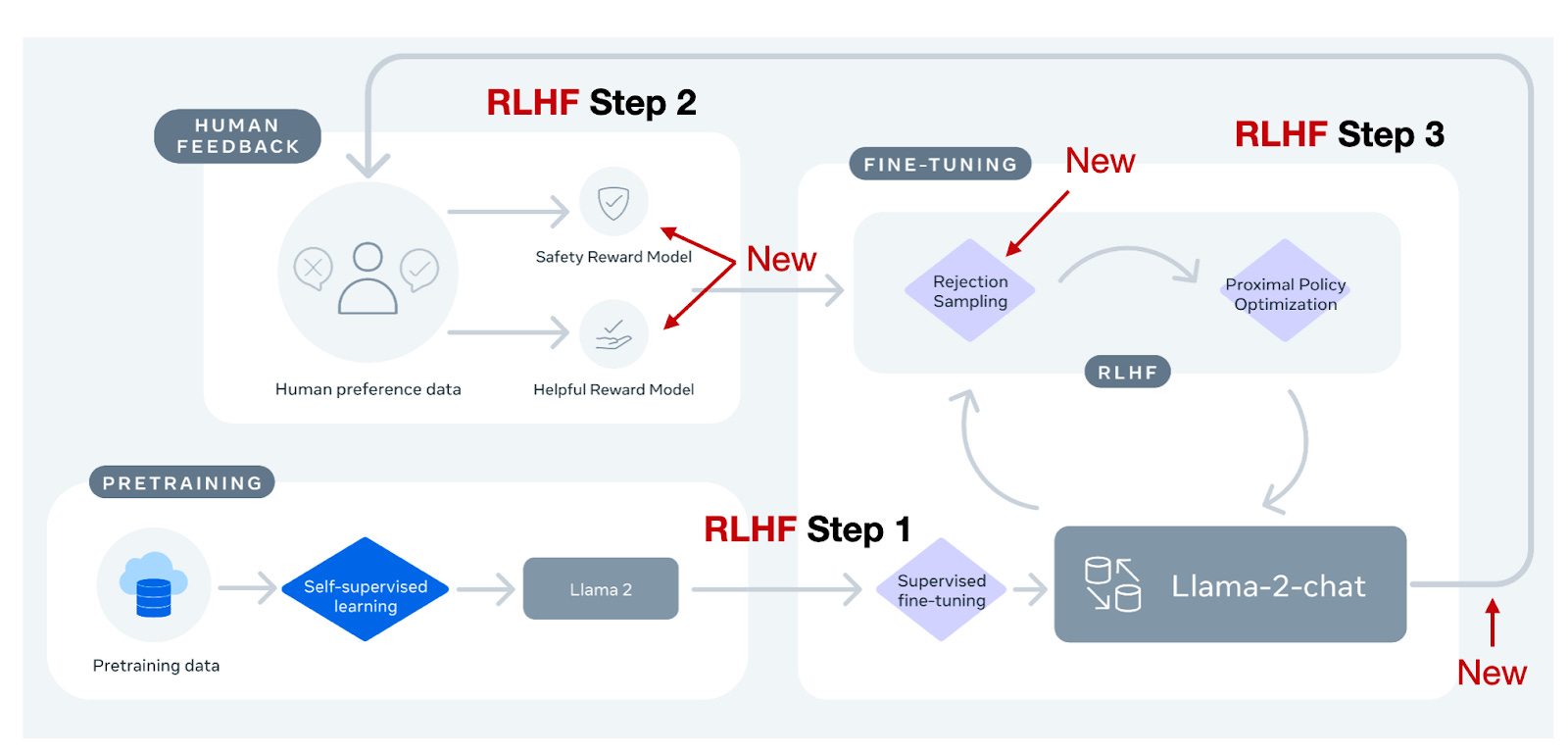

However, one aspect is often overlooked: the remarkable technical innovation behind the success of models like ChatGPT. One particularly ingenious concept is Reinforcement Learning from Human Feedback (RLHF), which combines reinforcement learning with human feedback.

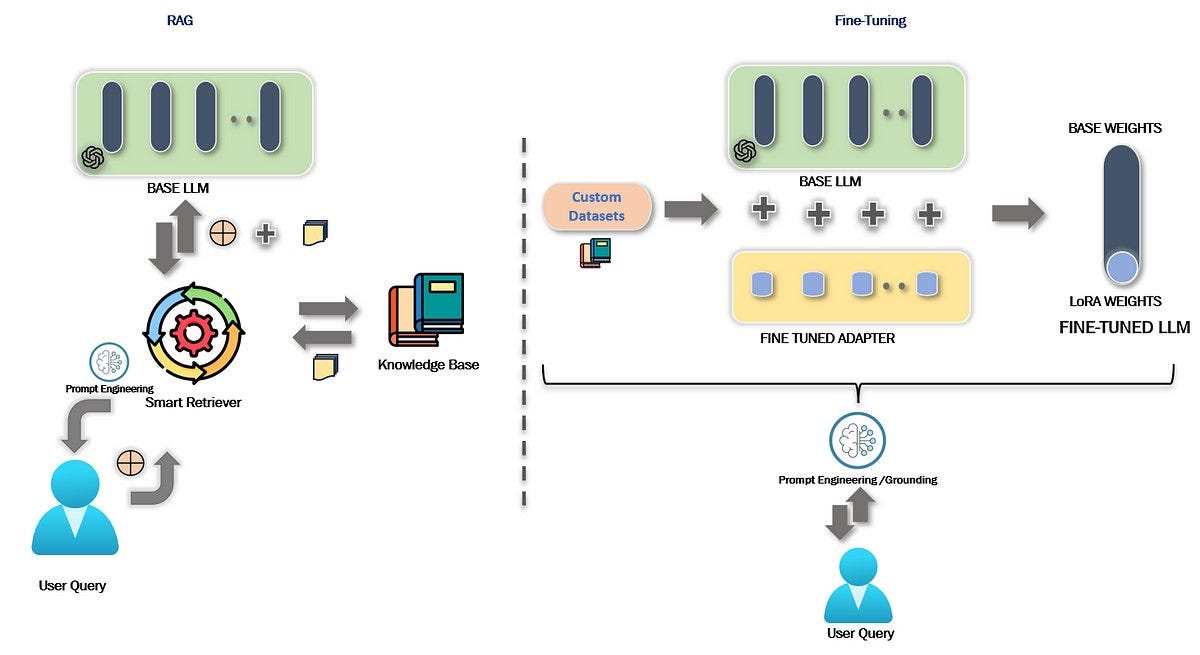

Finetuning an LLM: RLHF and alternatives (Part II), by Jose J. Martinez, MantisNLP

Improving LLM Performance - The Various Ways to Fine-Tune your LLMs !!, by Jacek Fleszar

Akshit Mehra - Labellerr

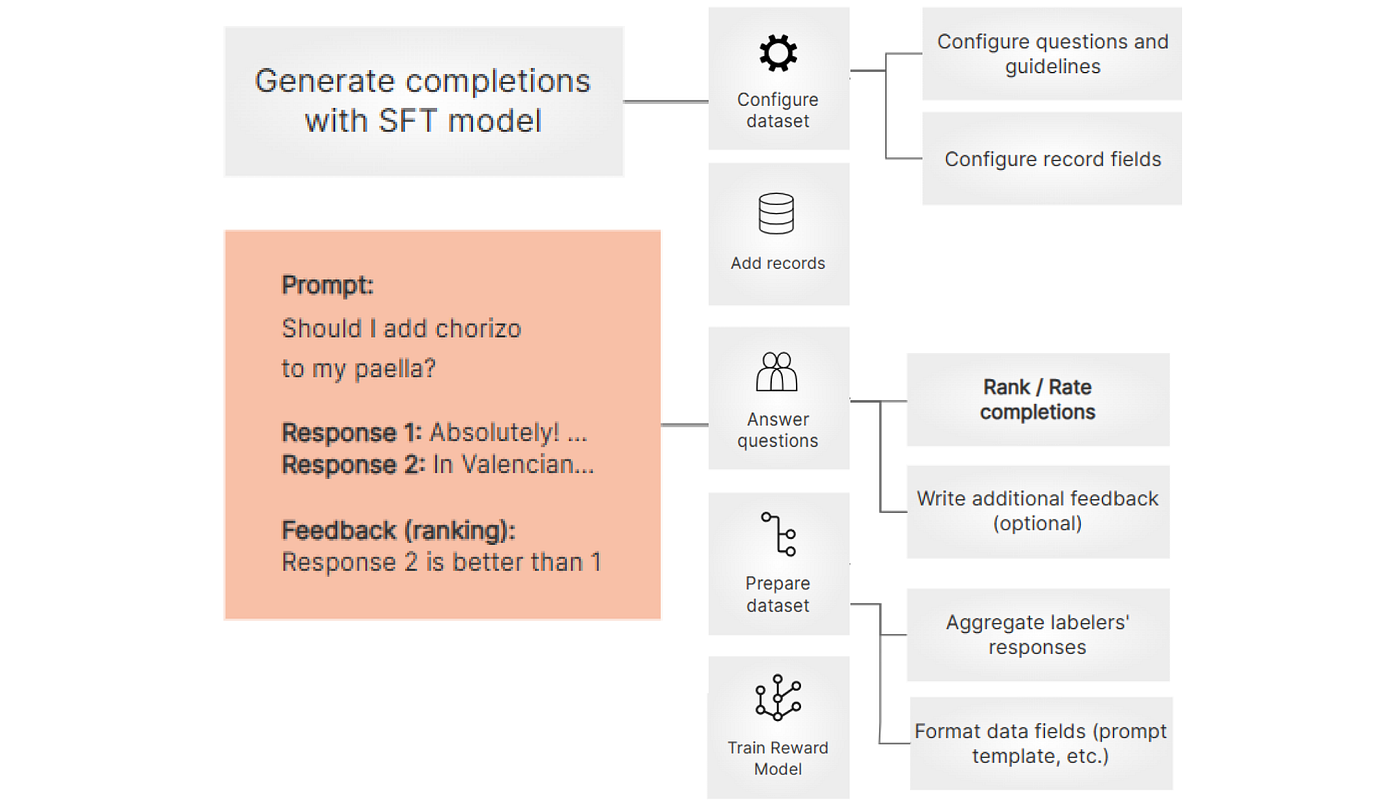

Building a Reward Model for Your LLM Using RLHF in Python, by Fareed Khan

Guide to RLHF LLMs in 2024: Benefits & Top Vendors

Improving your LLMs with RLHF on SageMaker

A Comprehensive Guide to fine-tuning LLMs using RLHF (Part-1)

Illustrating Reinforcement Learning from Human Feedback (RLHF)

A Beginner's Guide to Fine-Tuning Large Language Models

What is LLM Fine-Tuning? – Everything You Need to Know [2023 Guide]

Using LangSmith to Support Fine-tuning