Using LangSmith to Support Fine-tuning

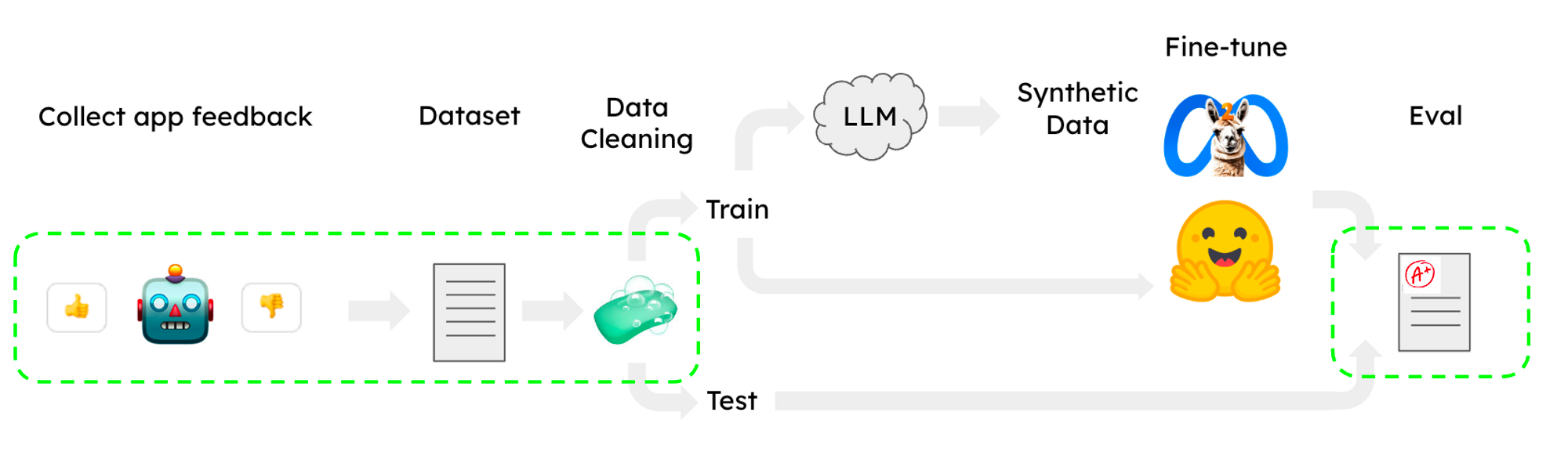

Summary

We created a guide for fine-tuning and evaluating LLMs, using LangSmith for dataset management and evaluation. The guide covers two routes: an open source LLM trained on Colab with HuggingFace, and OpenAI's new fine-tuning service. As a test case, we fine-tuned LLaMA2-7b-chat and gpt-3.5-turbo on an extraction task (knowledge graph triple extraction), using training data exported from LangSmith, and then evaluated the results in LangSmith as well. The Colab guide is here.
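To make the export step in the summary more concrete, here is a minimal sketch of how extraction examples might be turned into the chat-format JSONL that OpenAI's fine-tuning service accepts for gpt-3.5-turbo. It is not the guide's own code: the `{"text", "triples"}` record shape, the system prompt, and the helper names `to_chat_record` and `to_jsonl` are all assumptions made for illustration, and the way examples are pulled out of LangSmith is left out entirely.

```python
import json

# Illustrative system prompt; the original guide's prompt is not shown in this summary.
SYSTEM_PROMPT = (
    "Extract knowledge graph triples from the text as (subject, relation, object)."
)


def to_chat_record(example: dict) -> dict:
    """Turn one hypothetical {"text", "triples"} example into a chat-format record."""
    # One triple per line in the assistant's target output.
    triples = "\n".join(f"({s}, {r}, {o})" for s, r, o in example["triples"])
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": example["text"]},
            {"role": "assistant", "content": triples},
        ]
    }


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_record(e)) for e in examples)


# Hypothetical stand-in for examples exported from a dataset.
examples = [
    {
        "text": "Marie Curie discovered polonium.",
        "triples": [("Marie Curie", "discovered", "polonium")],
    },
]

jsonl = to_jsonl(examples)
print(jsonl)
```

The resulting string can be written to a `.jsonl` file and uploaded as training data. The same records could be flattened into a single prompt string for an open source model, although the exact template depends on the model being trained.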

🧩DemoGPT (@demo_gpt) / X

Applying OpenAI's RAG Strategies 和訳|p

Applying OpenAI's RAG Strategies - nikkie-memos

Applying OpenAI's RAG Strategies 和訳|p

Nicolas A. Duerr on LinkedIn: #karlsruhe #networking #learning #business

LangSaaS - No Code LangChain SaaS - Product Information, Latest Updates, and Reviews 2024

Using LangSmith to Support Fine-tuning

Multi-Vector Retriever for RAG on tables, text, and images 和訳|p

LangSaaS - No Code LangChain SaaS - Product Information, Latest Updates, and Reviews 2024

🧩DemoGPT (@demo_gpt) / X

LangChainのv0.0266からv0.0.276までの差分を整理(もくもく会向け)|mah_lab / 西見 公宏

Nicolas A. Duerr on LinkedIn: #innovation #ai #artificialintelligence #business

Fine-tuning: Unlocking the full potential of AI for businesses

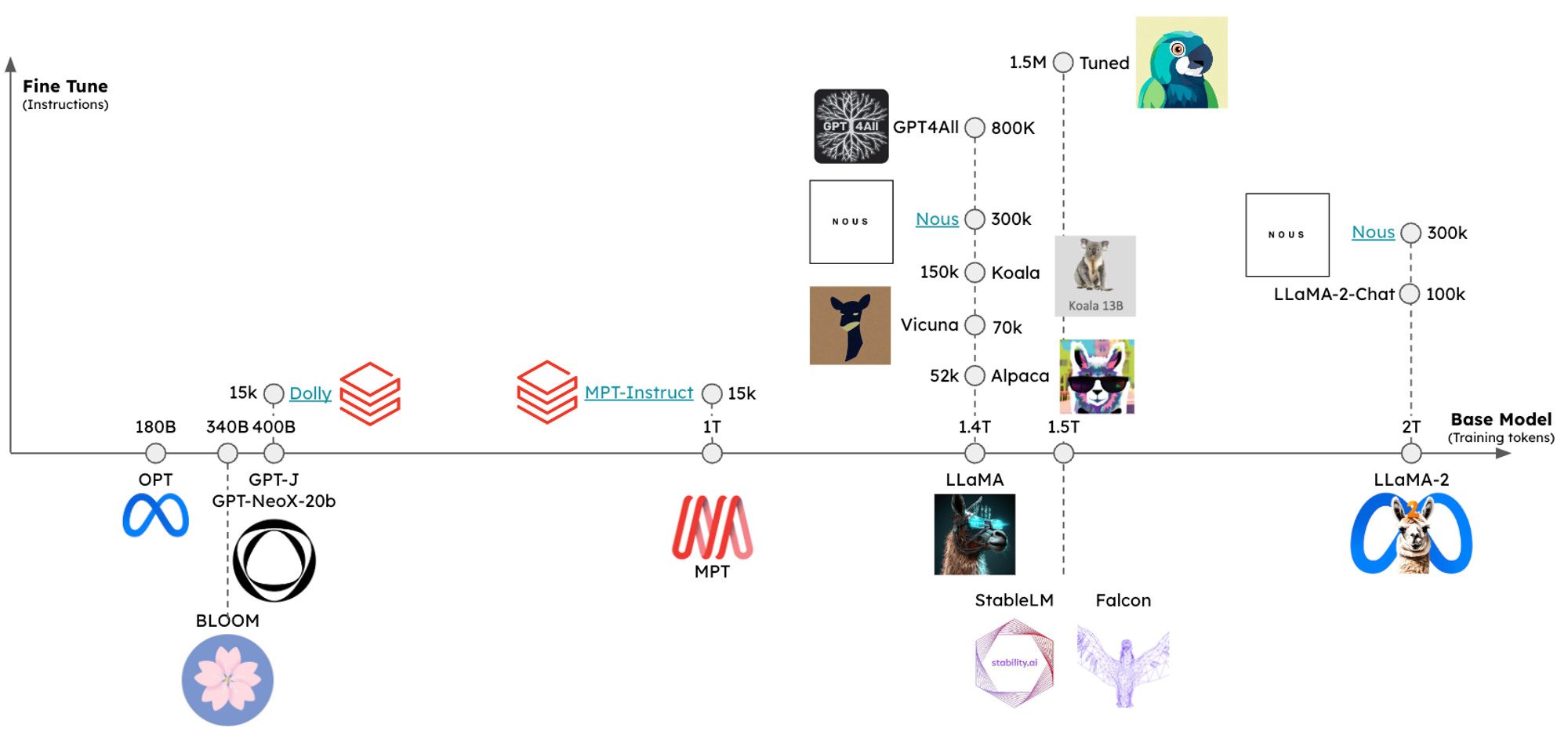

The complete guide to LLM fine-tuning - TechTalks

What's the Difference Between Fine-Tuning, Retraining, and RAG?

How to Fine-Tune ChatGPT for Specific Use-case - Shiksha Online