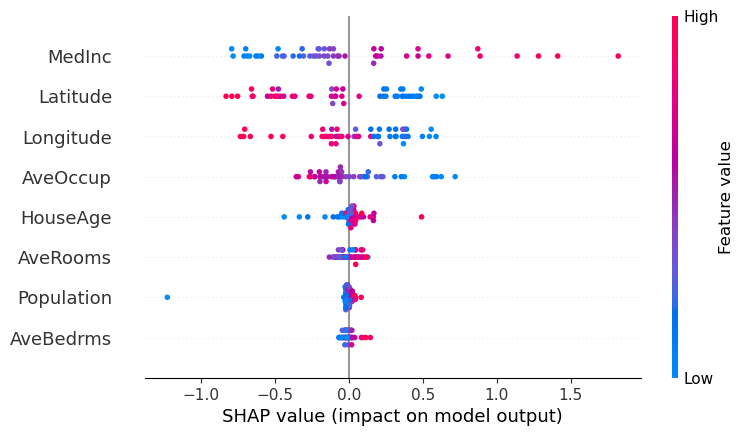

Using SHAP Values to Explain How Your Machine Learning Model Works, by Vinícius Trevisan

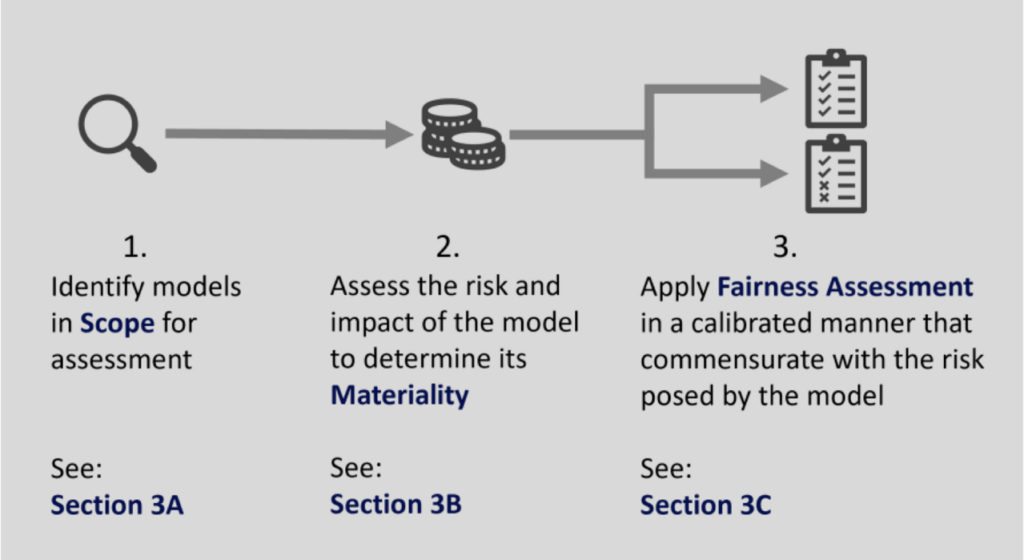

New Report: Risky Analysis: Assessing and Improving AI Governance Tools

Vinícius Trevisan – Medium

List: Machine Learning, Curated by Julià Amengual

Is your ML model stable? Checking model stability and population drift with PSI and CSI, by Vinícius Trevisan

Comparing sample distributions with the Kolmogorov-Smirnov (KS) test, by Vinícius Trevisan

List: Feature Selection, Curated by Brian R Muckle

Is it correct to use the test data to produce the Shapley values? I believe we should use the training data, as we are explaining the model, which was configured

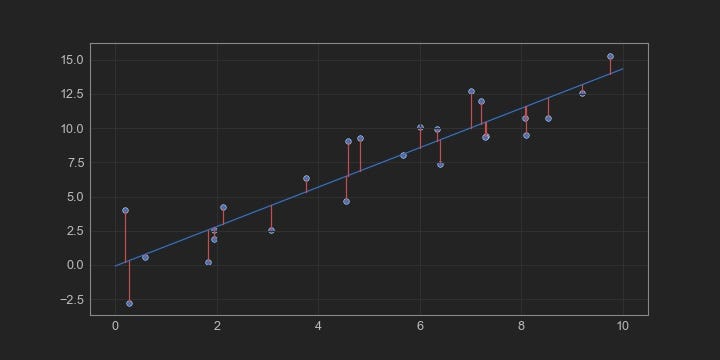

Comparing Robustness of MAE, MSE and RMSE, by Vinícius Trevisan

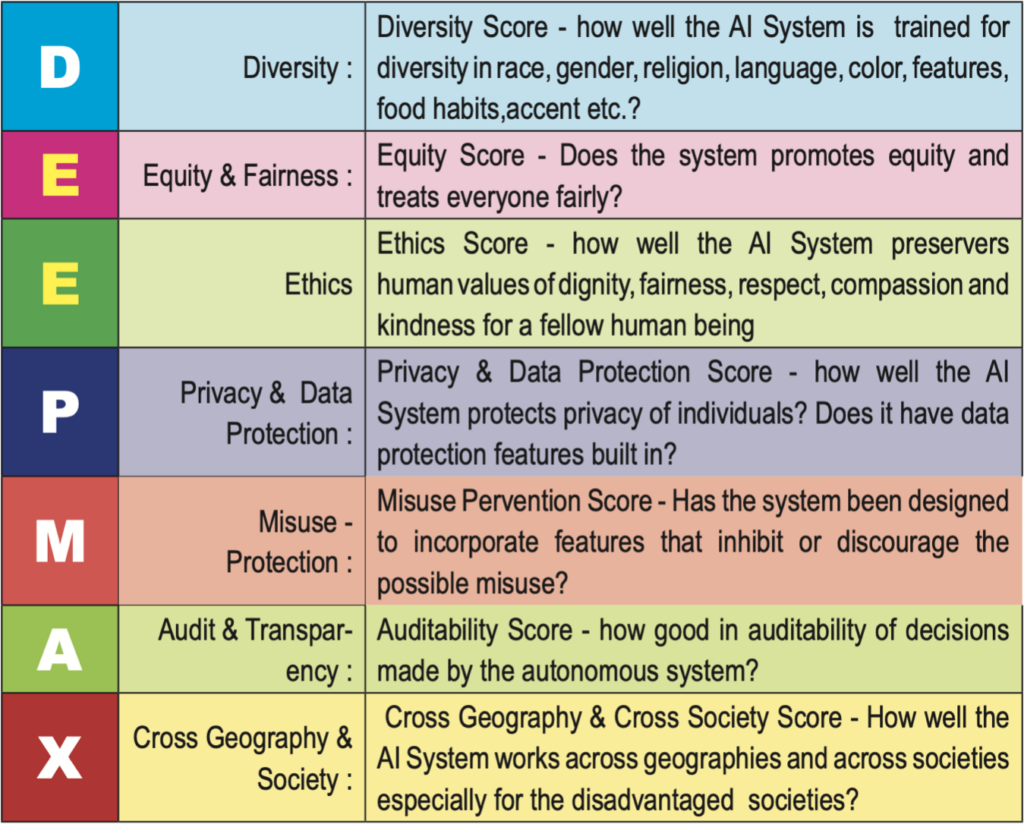

Introduction to Explainable AI (Explainable Artificial Intelligence or XAI) - 10 Senses

(PDF) Machine Learning Methods for Quantifying Uncertainty in Prospectivity Mapping of Magmatic-Hydrothermal Gold Deposits: A Case Study from Juruena Mineral Province, Northern Mato Grosso, Brazil

All You Need to Know About SHAP for Explainable AI?, by Isha Choudhary

SHAP values for beginners: What they mean and their applications

Compound Shapes: How to Find the Area of an L-Shape - Owlcation