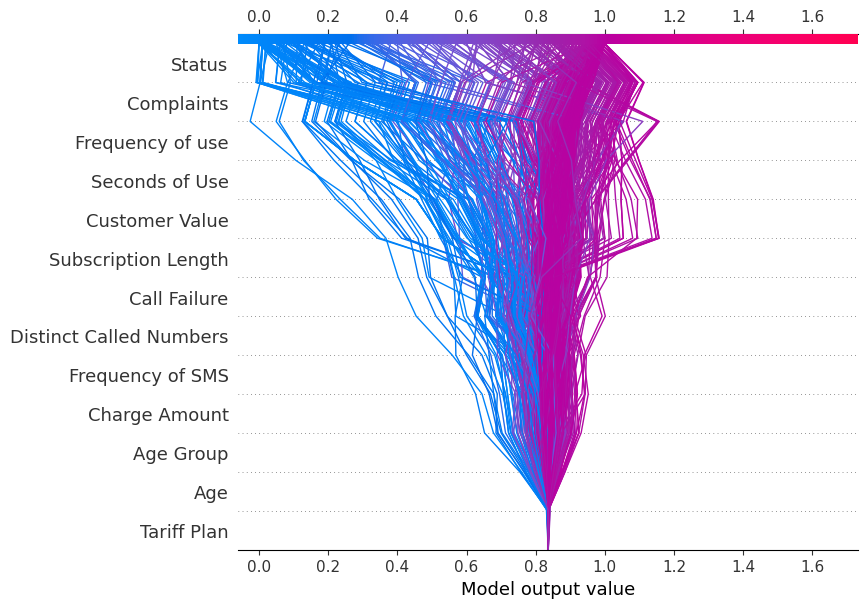

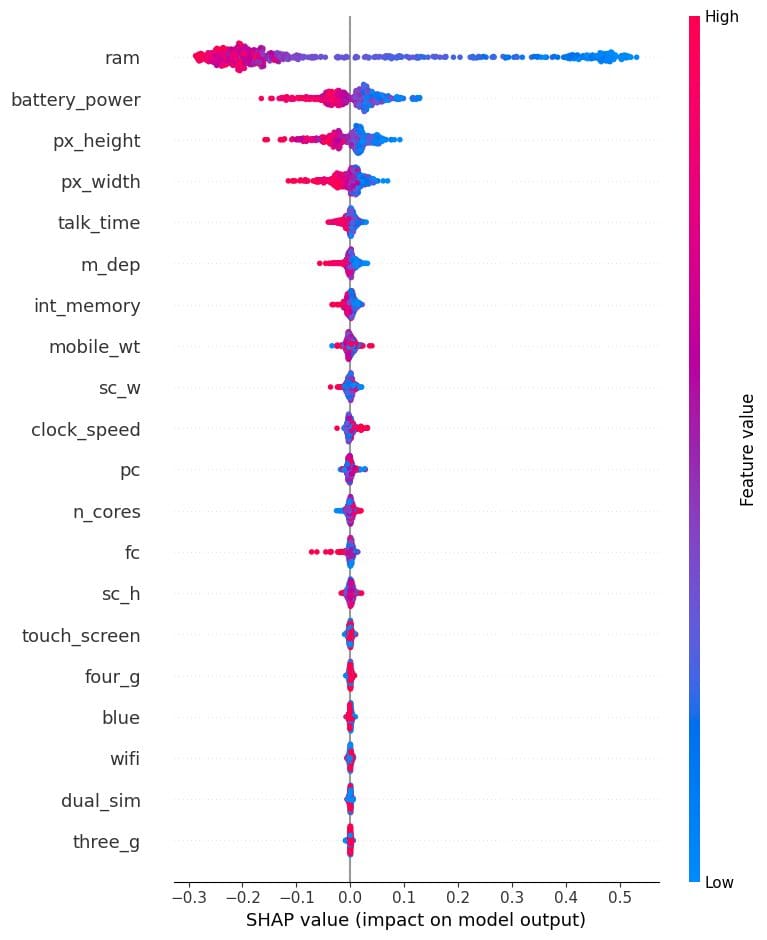

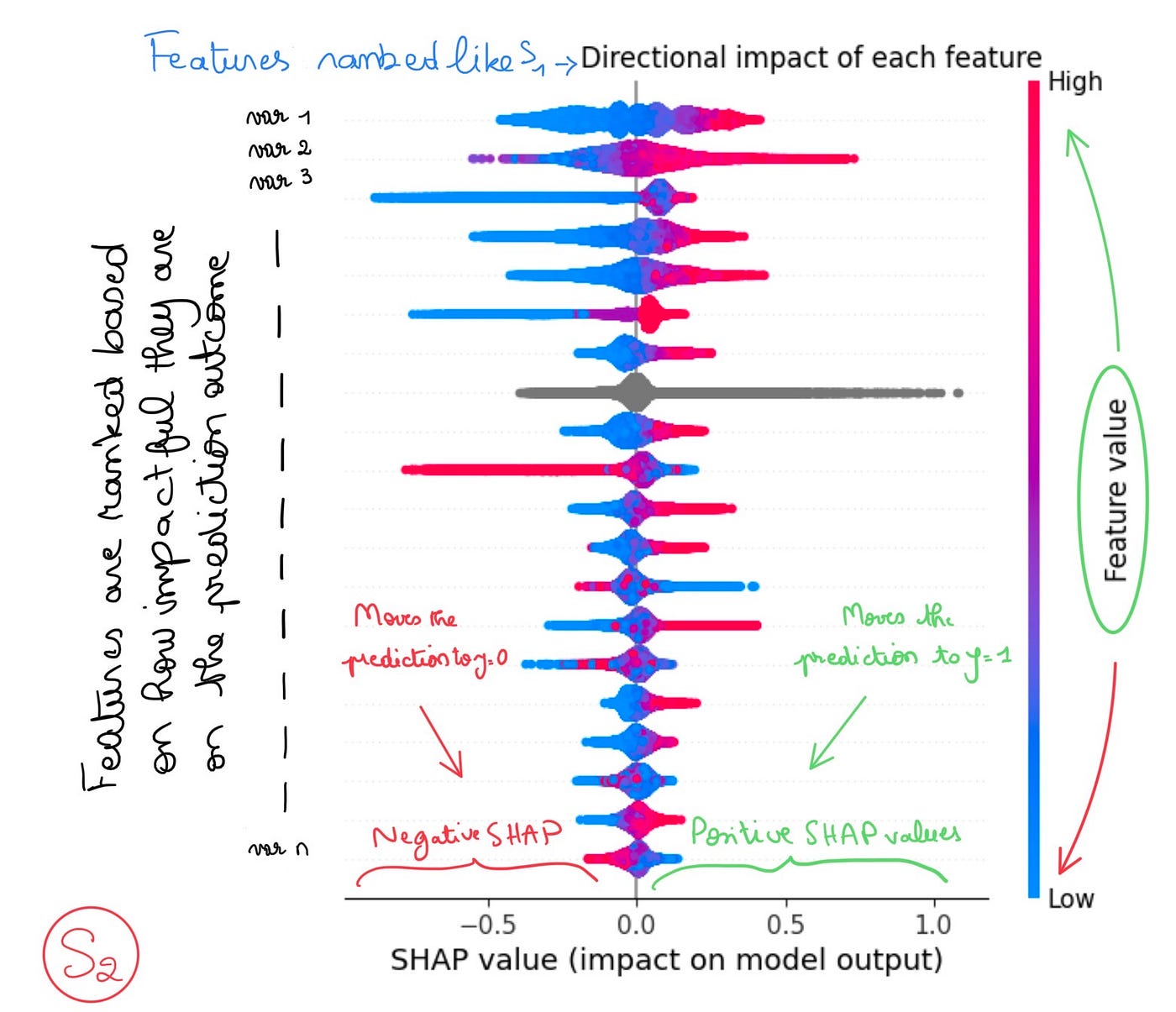

Feature importance based on SHAP-values. On the left side, the

An Introduction to SHAP Values and Machine Learning Interpretability

Jan BOONE, Associate Professor

Feature importance based on SHAP values. On the left side, (a), the

Using SHAP Values to Explain How Your Machine Learning Model Works, by Vinícius Trevisan

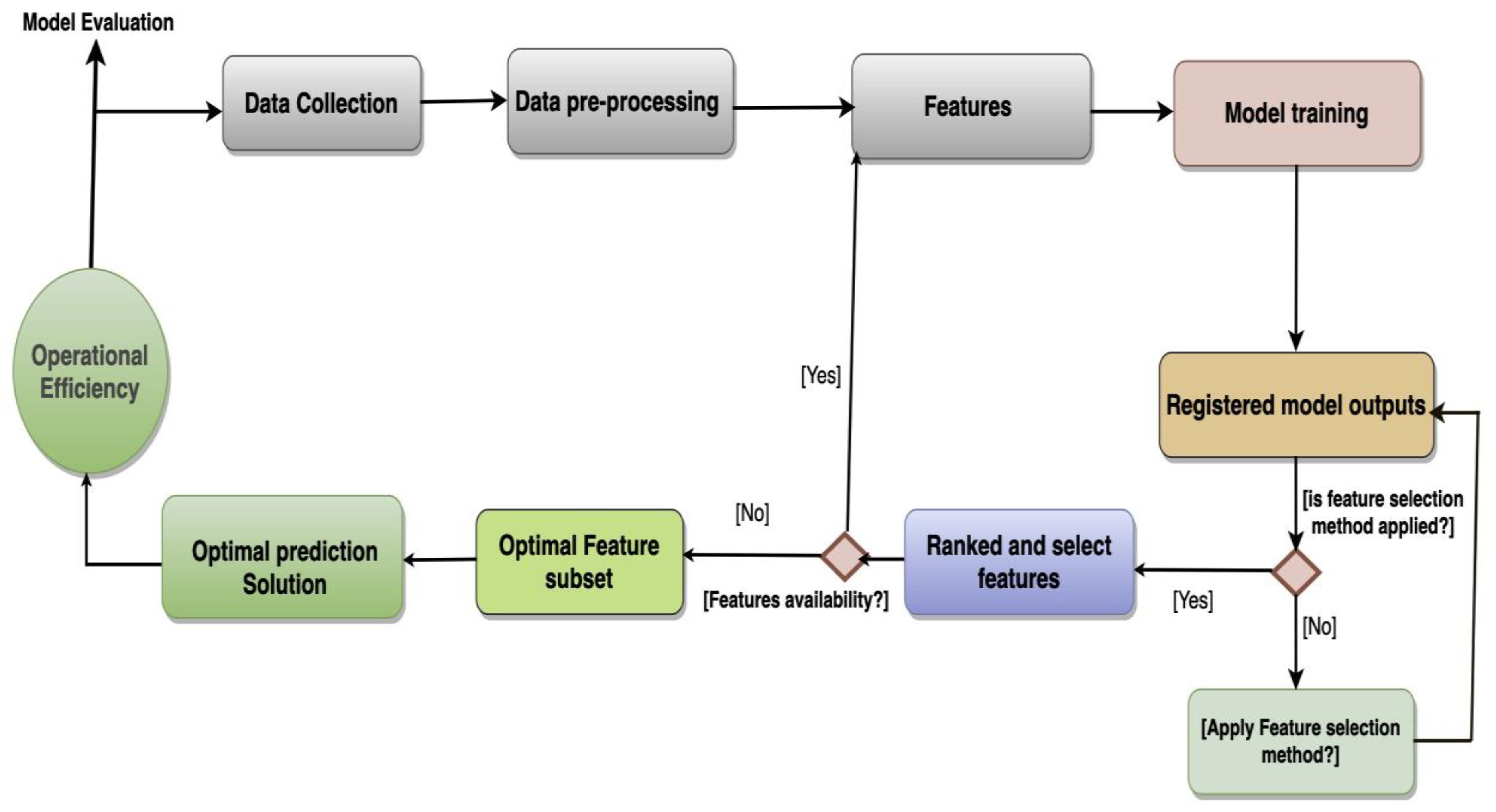

Future Internet, Free Full-Text

Steven VERSTOCKT, Ghent University, Gent, UGhent

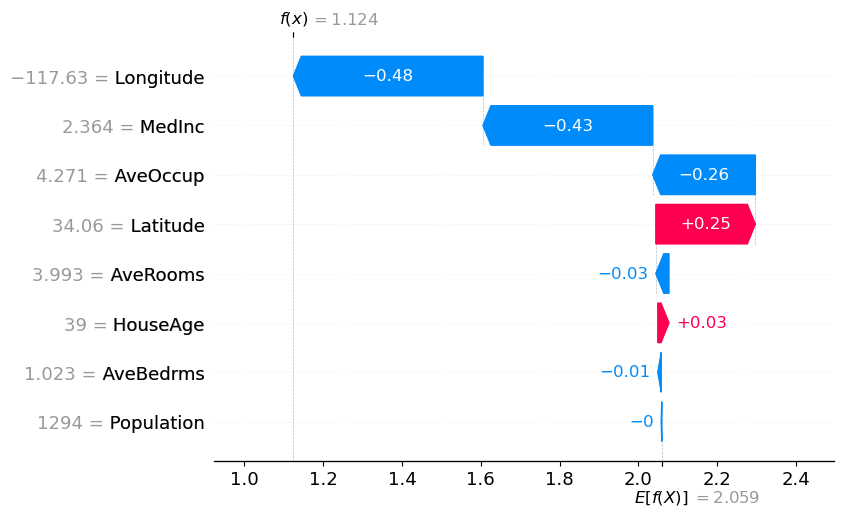

On the left, SHAP summary plot of the XGBoost model. The higher SHAP

Using SHAP Values for Model Interpretability in Machine Learning - KDnuggets

treeshap — explain tree-based models with SHAP values

Feature Importance Analysis with SHAP I Learned at Spotify (with the Help of the Avengers), by Khouloud El Alami

An introduction to explainable AI with Shapley values — SHAP latest documentation

A comparison of explainable artificial intelligence methods in the
