DeepSpeed Compression: A composable library for extreme

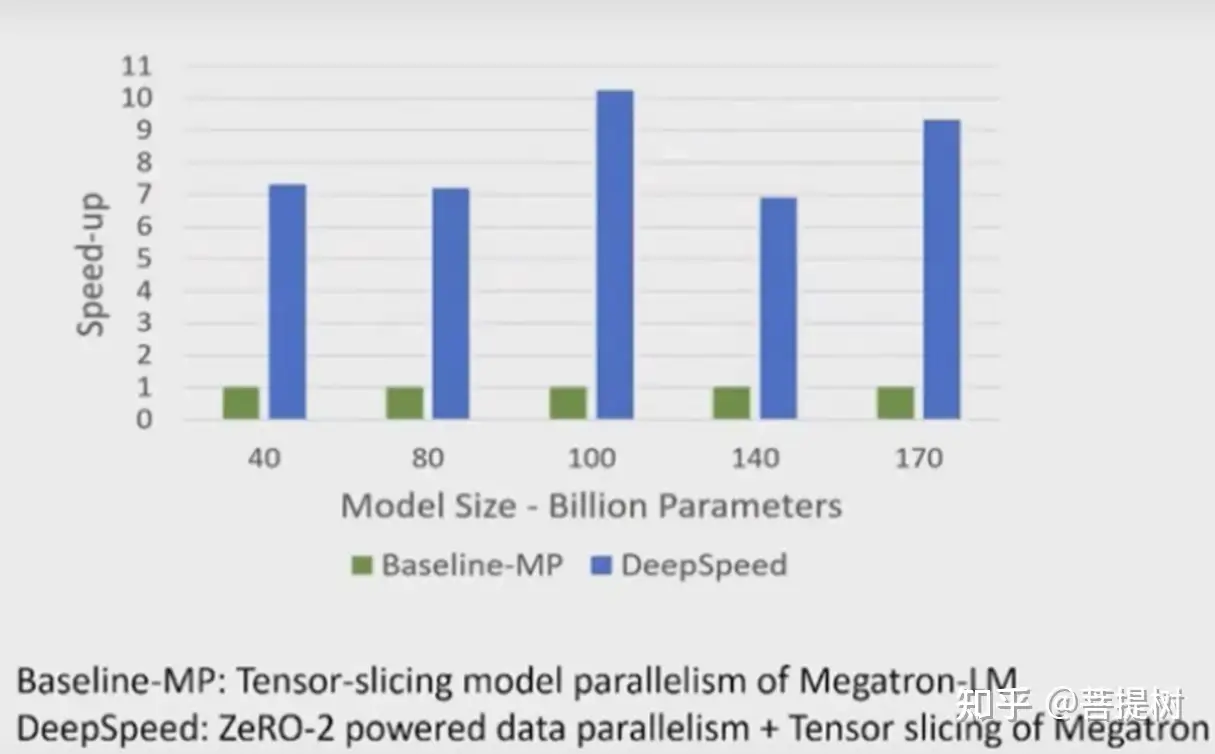

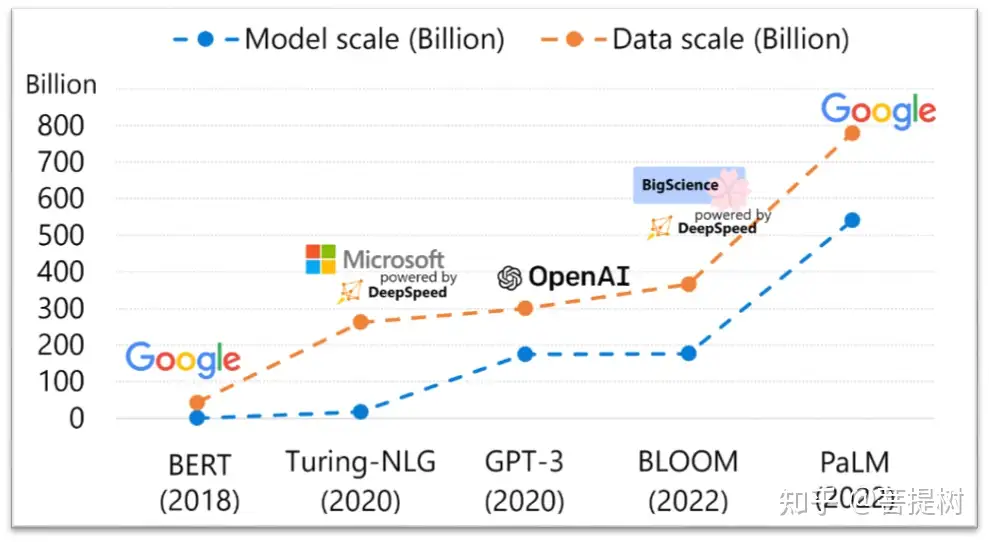

Large-scale models are revolutionizing deep learning and AI research, driving major improvements in language understanding, creative text generation, multilingual translation, and more. Despite these remarkable capabilities, the models’ large size creates latency and cost constraints that hinder the deployment of applications built on top of them. In particular, increased inference time and memory consumption […]

DeepSpeed Compression: A composable library for extreme compression and zero-cost quantization - Microsoft Research

Michel LAPLANE (@MichelLAPLANE) / X

Introduction to DeepSpeed - Zhihu

DeepSpeed ZeRO++: A leap in speed for LLM and chat model training with 4X less communication - Microsoft Research

[PDF] DeepSpeed-Inference: Enabling Efficient Inference of Transformer Models at Unprecedented Scale

Gioele Crispo on LinkedIn: Discover ChatGPT by asking it: Advantages, Disadvantages and Secrets

Introduction to DeepSpeed_deepseed zero - CSDN Blog

Shaden Smith on LinkedIn: DeepSpeed Data Efficiency: A composable library that makes better use of…

Scaling laws for very large neural nets — The Dan MacKinlay stable of variably-well-consider'd enterprises

Jean-marc Mommessin, Author at MarkTechPost

deepspeed - Python Package Health Analysis

Zip File Compression and Algorithm Explained - Spiceworks

Compression Technique - an overview
