Fine-tuning a GPT — Prefix-tuning, by Chris Kuo/Dr. Dataman

In this post and the next, I will walk you through the fine-tuning process for a Large Language Model (LLM) or a Generative Pre-trained Transformer (GPT). There are two prominent fine-tuning…

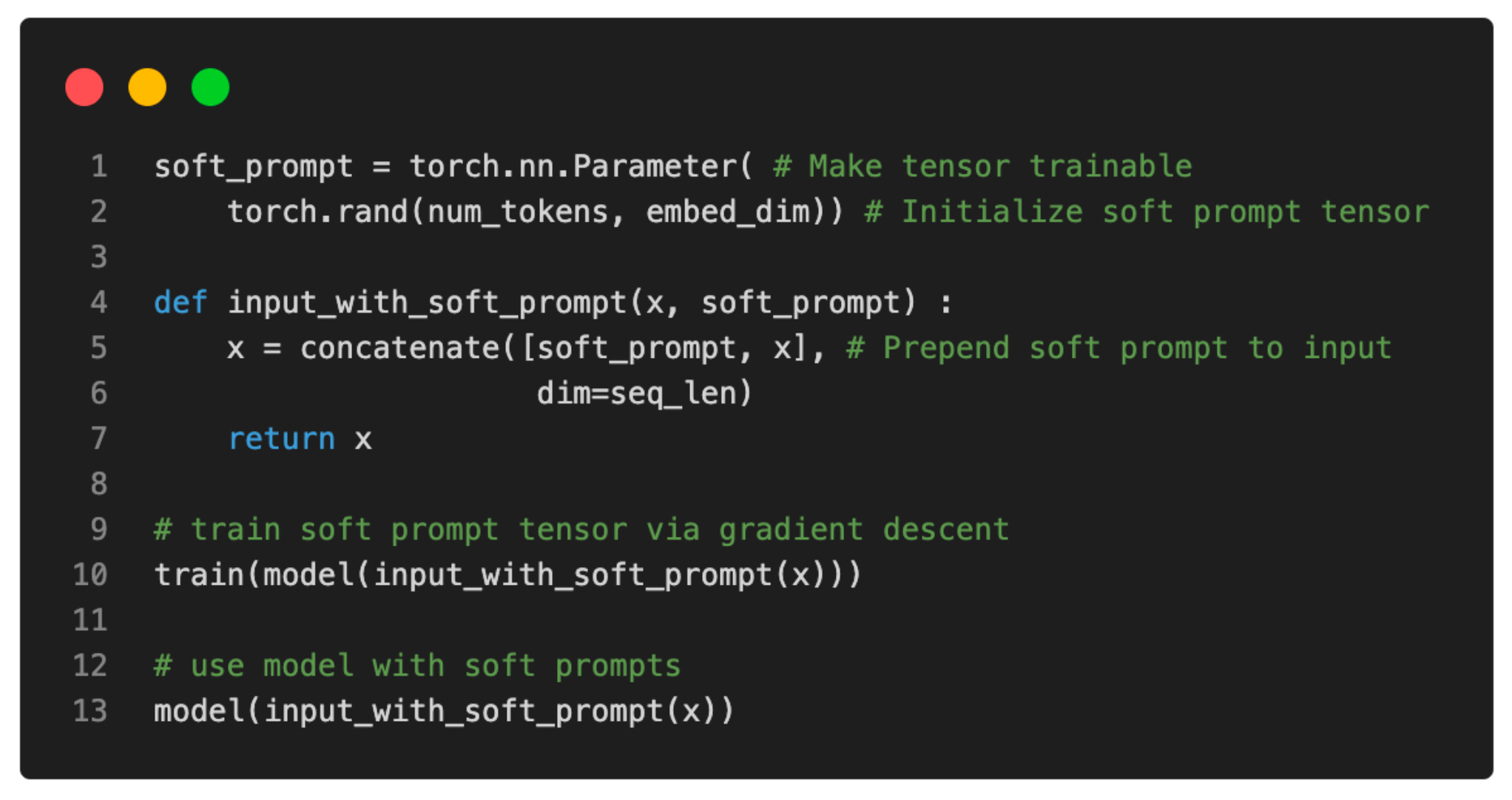

Understanding Parameter-Efficient LLM Finetuning: Prompt Tuning

List: Gpt, Curated by Jack Crowley

Classes as Priors: a simple model of Bayesian conditioning for

List: GPT_LALM_MMLU, Curated by Shashank Sahoo

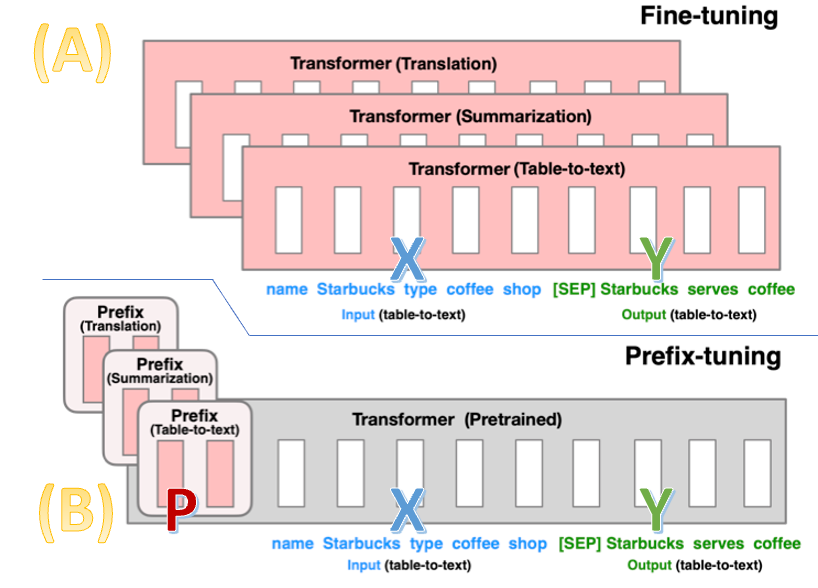

Alternative for fine-tuning? Prefix-tuning may be your answer

Understanding Parameter-Efficient LLM Finetuning: Prompt Tuning And Prefix Tuning

What is the specification for 'num_virtual_tokens: 20'? @chris

Parameter Efficient Fine, PDF

The data that those large language models were built on

Chris Kuo/Dr. Dataman – Medium

A Tutorial on the Open-source Lag-Llama for Time Series

Fine-tuning GPT-4 to produce user friendly data explorations (r

Unlock the Power of Fine-Tuning Pre-Trained Models in TensorFlow

The LLM Triad: Tune, Prompt, Reward - Gradient Flow

RAG Vs Fine-Tuning Vs Both: A Guide For Optimizing LLM Performance

Fine-tuning vs RAG: An opinion and comparative analysis

How to Fine-tune Mixtral 8x7b with Open-source Ludwig - Predibase
