Pre-training vs Fine-Tuning vs In-Context Learning of Large

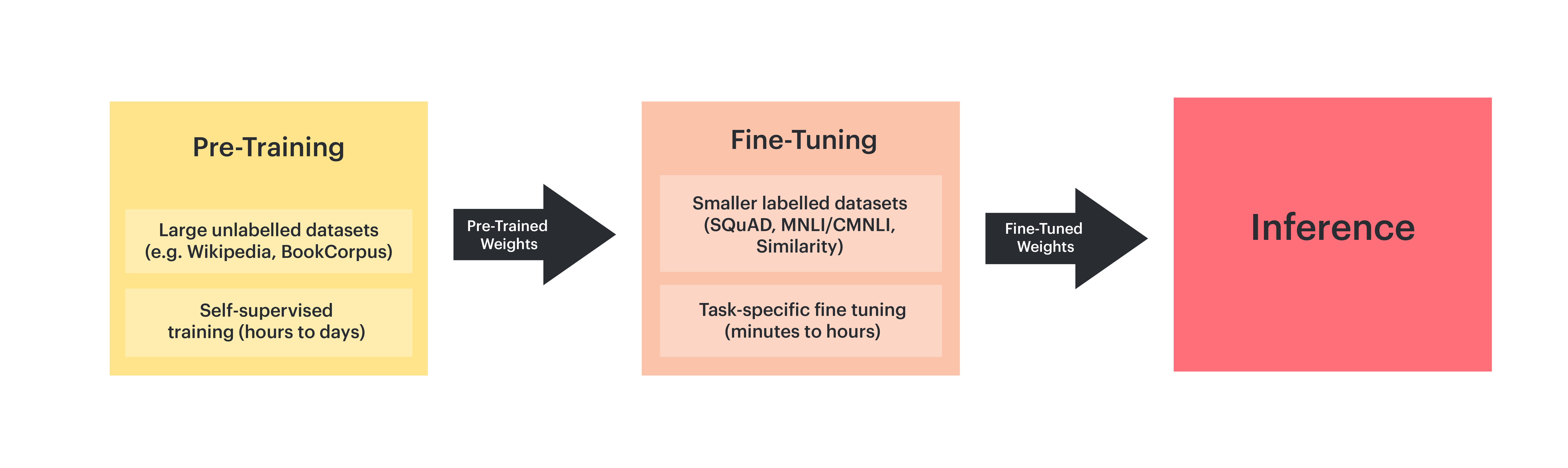

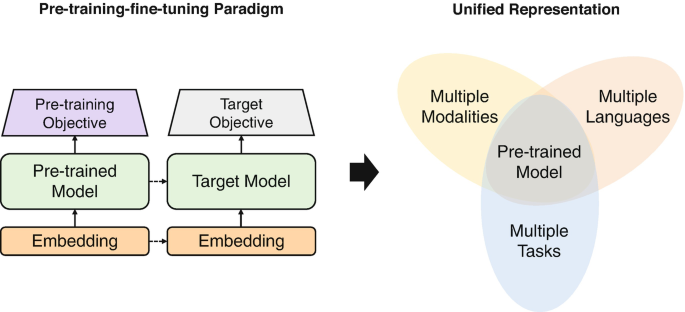

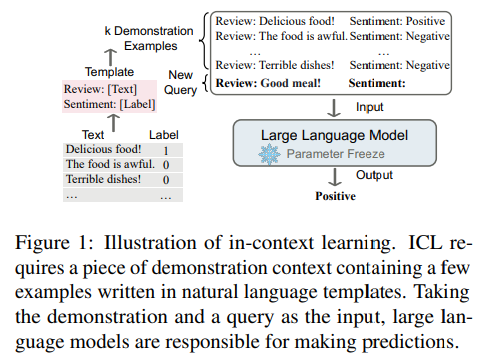

Large language models are first trained on massive text datasets in a process known as pre-training, through which they acquire a broad grasp of grammar, facts, and reasoning. Next comes fine-tuning, which specializes the pre-trained model for particular tasks or domains by further training it on task-specific data. And let's not forget the one that makes prompt engineering possible: in-context learning, which lets a model adapt its responses on the fly to the specific queries or prompts it is given, without any change to its weights.
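The practical difference between fine-tuning and in-context learning can be sketched with a toy example. This is an illustration under loud assumptions, not how any real LLM library works: `ToyModel`, `fine_tune` and `build_few_shot_prompt` are hypothetical names, and a one-weight linear model stands in for a pre-trained network. Fine-tuning runs gradient descent on task data and yields new parameters. In-context learning leaves the parameters untouched and only puts task demonstrations into the input.

```python
"""Toy contrast between fine-tuning and in-context learning (illustrative sketch only)."""
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class ToyModel:
    # Hypothetical stand-in for a pre-trained model: one weight and one bias.
    weight: float = 1.0
    bias: float = 0.0

    def predict(self, x: float) -> float:
        return self.weight * x + self.bias


def fine_tune(model: ToyModel, data: Sequence[Tuple[float, float]],
              lr: float = 0.05, epochs: int = 2000) -> ToyModel:
    """Fine-tuning: gradient descent on mean squared error over task data.

    Starts from the pre-trained parameters and returns a NEW model with
    updated parameters; the original model object is left unchanged.
    """
    w, b = model.weight, model.bias
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return ToyModel(w, b)


def build_few_shot_prompt(examples: Sequence[Tuple[str, str]], query: str) -> str:
    """In-context learning: demonstrations go into the prompt; no weights change."""
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    base = ToyModel()  # plays the role of the pre-trained model
    task_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # y = 2x + 1
    tuned = fine_tune(base, task_data)
    print("base params:", base.weight, base.bias)
    print("fine-tuned params:", round(tuned.weight, 3), round(tuned.bias, 3))
    print(build_few_shot_prompt([("cat", "chat"), ("dog", "chien")], "bird"))
```

The design choice to return a fresh `ToyModel` from `fine_tune` mirrors the point being made: fine-tuning produces a different set of weights, while the few-shot prompt would be sent to the same, unchanged model. The default learning rate is only tuned for small inputs like the sample data and may diverge on other scales.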

1. Introduction — Pre-Training and Fine-Tuning BERT for the IPU

The complete guide to LLM fine-tuning - TechTalks

Pre-trained Models for Representation Learning

All You Need to Know about In-Context Learning, by Salvatore Raieli

Mastering Generative AI Interactions: A Guide to In-Context Learning and Fine-Tuning

Parameter-efficient fine-tuning of large-scale pre-trained language models

Fine-Tuning AI Models with Your Organization's Data: A

Fine-Tuning Insights: Lessons from Experimenting with RedPajama

D6 TIPS - AF Fine-Tuning, Technical Solutions

RAG vs Finetuning - Your Best Approach to Boost LLM Application.

RAG Vs Fine-Tuning Vs Both: A Guide For Optimizing LLM Performance - Galileo
